What’s in a picture of a system

The word system derives from the Greek syn (with) + histanai (to cause to stand), meaning a cooperative and supportive set-up, or a stance or position of multiple interconnected elements.

The Collins dictionary lists the following meanings for the word:

- Way of working

- Set of (working) devices (powered)

- Set of parts to supply water, heat etc.

- Network of things to enable travel or communication

- Body parts that together perform a particular function

- Set of rules for measuring or sorting things

Notice, if you will, that all these definitions fall into just two categories:

- Structures, or passive arrangements of interconnected elements or things (products)

- Rules, constraints, and procedures of interaction that cause and control structural changes (processes)

The definition from the ’93 draft Systems Engineering Management standard (MIL-STD-499B) adds another necessary ingredient to the mixture, people:

“System – An integrated composite of people, products, and processes that provide a capability to satisfy a stated need or objective.”

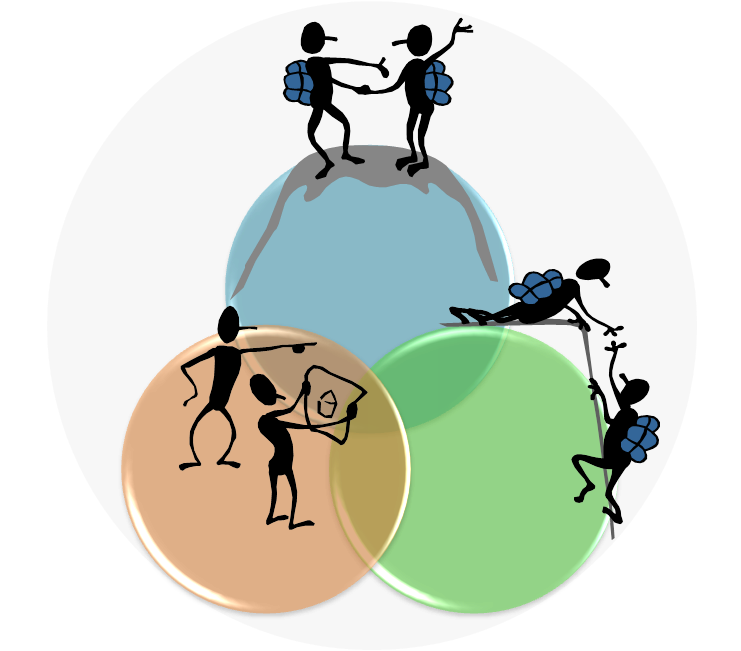

People can meet while doing things they love (like hiking) and maybe strike up a conversation at the top or on the way down the mountain. They may decide to take the next trip together, and they may use products such as maps, hiking tools, and other gear to plan the next hike and to assist them during it. On the agreed day, they will carry out the planned trip, supporting and helping each other as the process unfolds and through all the unforeseen (unplanned) situations they face. At the end of a successful trip, they may congratulate each other on a job well done and have lunch while they review the “lessons learned” and maybe pitch some ideas for a new hike.

This is, in short, the typical story of how every so-called Socio-Technical System (STS), or organization, works. The Kihbernetics Institute is such an organization, and our logo is essentially the story of how we work with clients on every unique “hike” we plan and take together.